AI-Enhanced Assessment CRUD

Designing a Scalable, Human-in-the-Loop System for AI-Generated Assessments

UX CASE STUDY


Overview

Udacity’s enterprise business relied heavily on third-party assessments and manual, engineering-driven workflows to create and manage in-house assessments. As demand increased—and AI-generated content became viable—these processes failed to scale.

I led the design of an AI-enhanced assessment creation and management system within Udacity’s enterprise management platform, enabling internal teams to quickly create, review, edit, and maintain assessments—using both manual and AI-generated questions—under significant time and technical constraints.

Team

1 Product Manager, 2 Engineers, 1 Product Designer (Me)

Time

30 days

Tools

Figma, ChatGPT, Lovable, Gemini, Jira, Confluence, G Suite

All collaboration was conducted remotely.

Audience

Udacity Staff

Platform

Web

Key Stakeholders

Sales Solution Architects, Customer Success Operations, Customer Success Managers, Content

Why This Matters

As organizations shift toward skills-based hiring and upskilling, assessments become critical infrastructure—not just content. Yet most learning platforms struggle to scale assessment creation due to heavy engineering dependency, slow workflows, and limited quality control.

This project addressed a foundational problem: how to safely and efficiently scale assessment creation using AI without sacrificing quality, trust, or operational control.

By designing a flexible assessment CRUD system with built-in human review, this work:

Reduced reliance on engineering for day-to-day assessment creation

Enabled non-technical teams to operate independently at scale

Established a durable foundation for AI-generated assessments across multiple future use cases

Directly supported enterprise contracts and revenue timelines

At scale, this system shifts assessment creation from a bottleneck into a strategic capability, enabling Udacity to move faster as AI-driven content generation becomes table stakes.

The Challenge

Several systemic issues limited Udacity’s ability to scale assessments:

1. Engineering Dependency

Assessments and questions were manually created or configured by engineers, creating bottlenecks and slowing response to customer needs.

2. Non-Scalable Workflows

Non-technical teams (Customer Success, Content, Ops) lacked tools to independently create or manage assessments, resulting in operational delays.

3. No Quality Control Framework

There was no consistent way to evaluate question quality or performance, limiting confidence in assessment accuracy and fairness.

4. AI Without Governance

As the team explored AI-generated questions, there was no structured human-review process to ensure pedagogical quality, relevance, or trustworthiness.

At the same time, contractual commitments required delivering a functional solution in weeks, not months.

Design Objective

Design a scalable assessment creation system that:

Enables full CRUD functionality for assessments and questions

Supports both manual and AI-generated content

Empowers non-technical staff to operate independently

Introduces human-in-the-loop review for AI-generated questions

Can be delivered quickly within legacy platform constraints

My Role

Lead Product Designer (IC Lead)

I owned the end-to-end UX strategy and execution for this initiative.

Responsibilities

Experience definition under tight constraints

Workflow and systems design

AI-assisted ideation and prototyping

UX/UI design within an existing enterprise platform

Cross-functional alignment and handoff

Constraints & Scope

This project was intentionally execution-heavy and time-boxed.

Timeline

~30 days end-to-end

Key Constraints

Existing legacy assessment experience partially built

New AI APIs and databases under active development

Minimal time for foundational refactors

Q3–Q4 enterprise contracts dependent on delivery

Scope Expansion

The project originally focused on AI-driven question creation, but early discovery revealed that the core assessment experience was incomplete. To support AI responsibly, we expanded scope to design foundational assessment CRUD so that both manual and AI-generated workflows could scale.

This decision traded visual polish for long-term system viability.

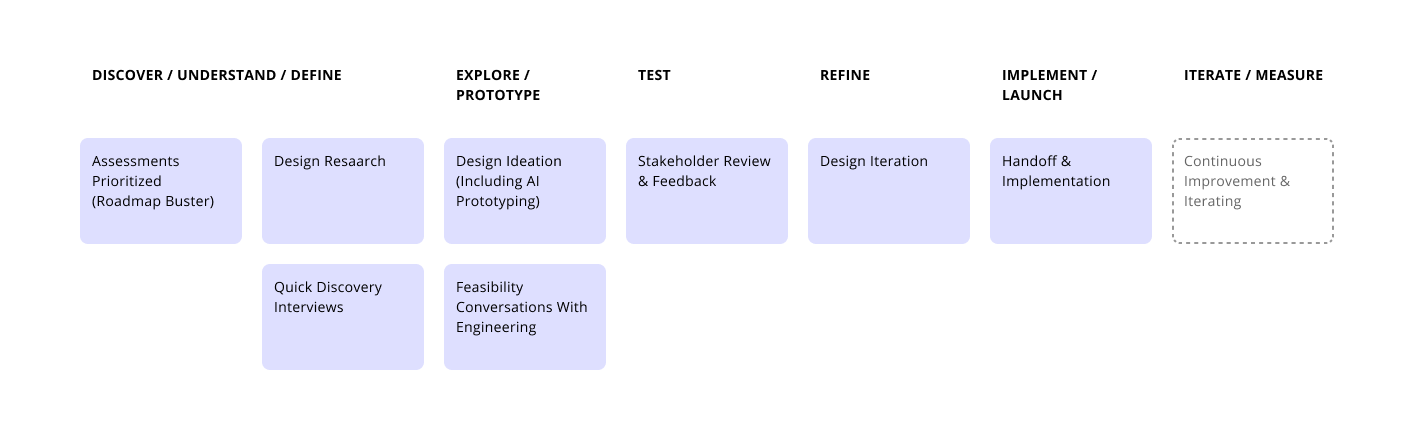

Approach

1. Rapid System Understanding

Audited the existing assessment experience and internal workflows

Reviewed an engineering-built Python prototype for AI-generated questions

Partnered with engineers to understand backend constraints and data models

2. Experience Principles

Non-technical users should operate independently

AI should accelerate creation, not bypass human judgment

CRUD workflows must support iteration, not just creation

The system must be extensible to future assessment types

3. AI-Enhanced Ideation

I used AI tools to:

Rapidly explore workflow variations

Generate UI and copy concepts

Stress-test edge cases in AI-generated content review

This allowed faster convergence while maintaining design rigor.

The Solution

I designed a flexible assessment management system embedded within Udacity’s enterprise platform.

Core Capabilities

Assessment CRUD

Create, edit, publish, and manage assessments without engineering support

Question Management

Add, edit, replace, and remove questions

Support both manual and AI-generated content

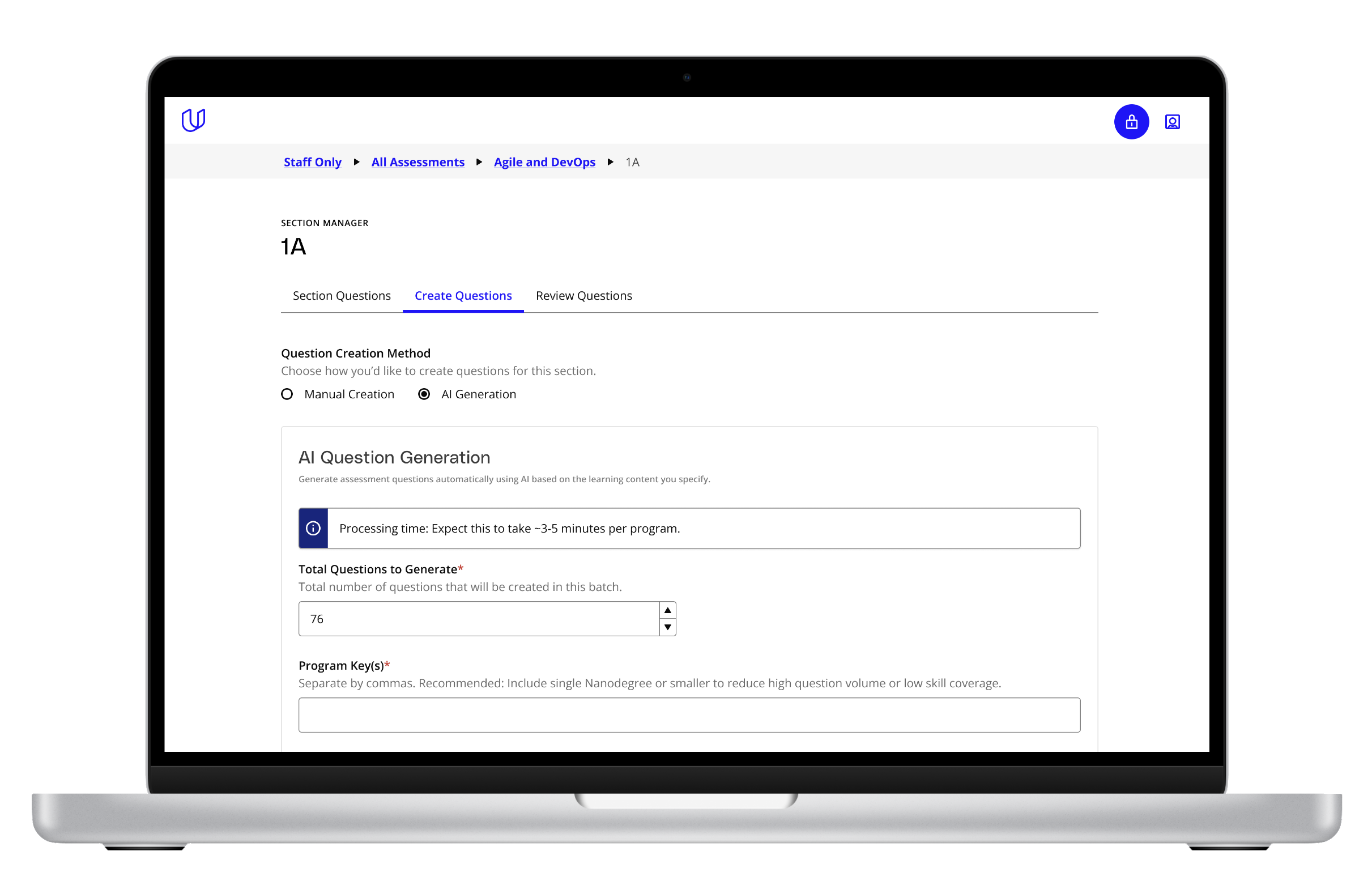

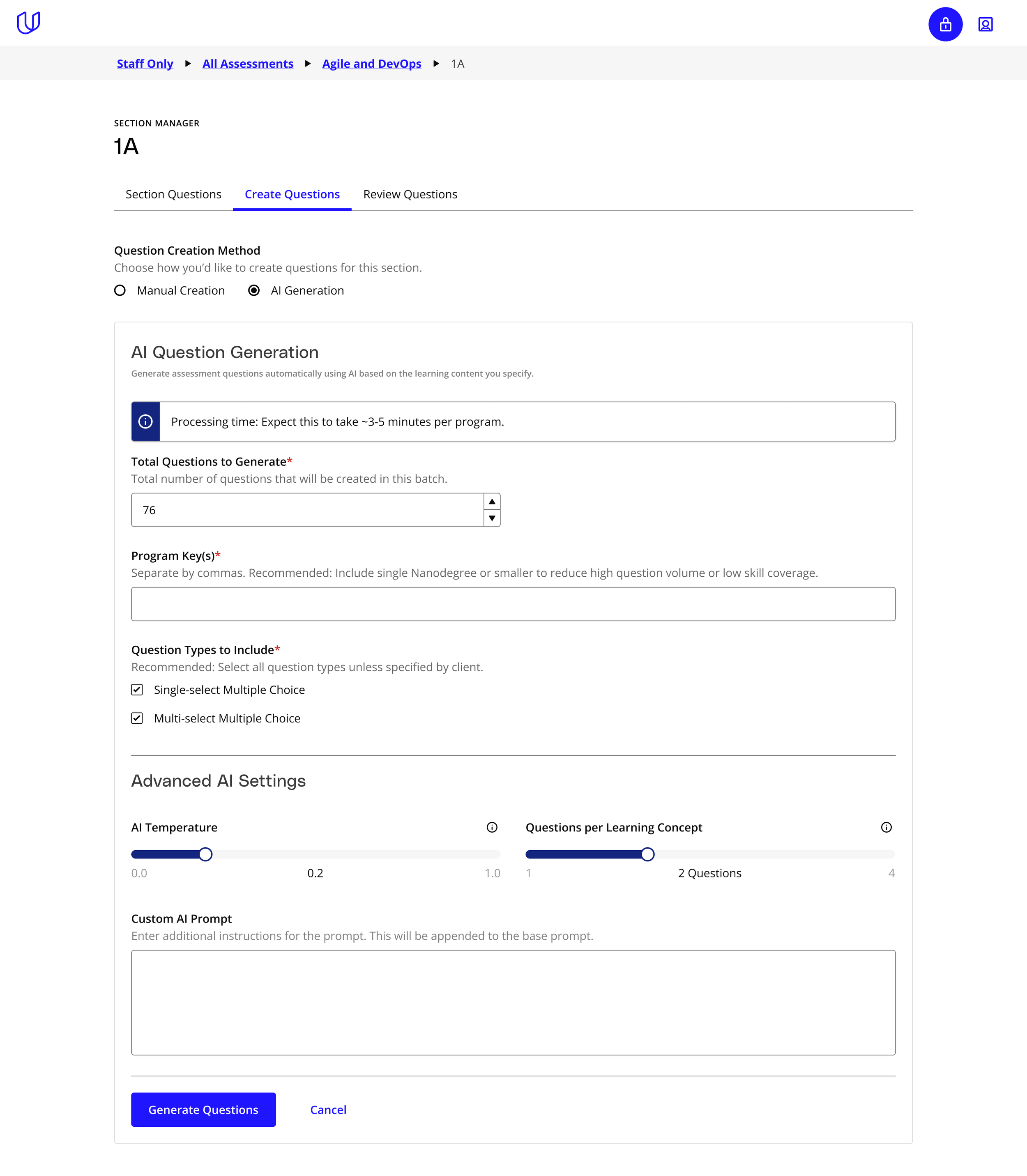

AI-Generated Question Flow

Guided AI prompt inputs

Clear distinction between AI-generated and approved content

Human review and approval before questions become active

Quality & Control

Structured review points for AI-generated questions

Ability to iterate on low-performing or low-quality content

This approach balanced speed with governance—critical for trust in AI-generated assessments.
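
As a rough illustration of the review gate described above, the Python sketch below models how an AI-generated question might stay inactive until a human approves it. It is not the production implementation: every name in it (Assessment, Question, Source, Status, review) is hypothetical, and it assumes that manually written questions become active on creation, which this case study does not specify.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class Source(Enum):
    MANUAL = "manual"
    AI = "ai"


class Status(Enum):
    PENDING_REVIEW = "pending_review"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class Question:
    text: str
    source: Source
    status: Status
    reviewer: Optional[str] = None  # set once a human has reviewed the question


@dataclass
class Assessment:
    title: str
    questions: List[Question] = field(default_factory=list)

    def add_question(self, text: str, source: Source) -> Question:
        # Assumption: manual questions are active on creation,
        # while AI-generated questions always wait for human review.
        if source is Source.MANUAL:
            status = Status.APPROVED
        else:
            status = Status.PENDING_REVIEW
        question = Question(text=text, source=source, status=status)
        self.questions.append(question)
        return question

    def review(self, question: Question, reviewer: str, approve: bool) -> None:
        # Only questions still waiting for review can be approved or rejected.
        if question.status is not Status.PENDING_REVIEW:
            raise ValueError("only questions pending review can be reviewed")
        question.status = Status.APPROVED if approve else Status.REJECTED
        question.reviewer = reviewer

    def active_questions(self) -> List[Question]:
        # Learners would only ever see approved questions.
        return [q for q in self.questions if q.status is Status.APPROVED]
```

In this sketch, calling `add_question(..., Source.AI)` adds the question to the assessment but leaves it out of `active_questions()` until `review(..., approve=True)` is called. Keeping the source and the review status as separate fields mirrors the distinction between AI-generated and approved content in the interface.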

Lovable ideation example screens

Impact

Before

Assessments manually created by engineers

No scalable workflow for updates or iteration

Limited visibility or control for non-technical teams

After

Fully functional assessment and question CRUD

AI-generated and manual workflows supported

Non-technical teams enabled to operate independently

Business Impact

Unblocked enterprise assessment contracts for Q3–Q4

Reduced operational and engineering load

Established a scalable foundation for AI-generated assessment content

Reflection

This project required making intentional tradeoffs:

Speed over visual refinement

System integrity over incremental feature delivery

Governance over unchecked AI automation

The outcome was not a polished surface feature, but a durable internal system—one designed to evolve as AI capabilities mature.

More importantly, it demonstrated how AI can be responsibly integrated into enterprise workflows when paired with strong UX and human oversight.
