AI-Enhanced Assessment CRUD

Experience Design to enable and accelerate AI-generated assessment creation in Udacity’s enterprise management platform

CASE STUDY

BEFORE

AFTER

Background

Udacity is an online learning platform that offers courses and Nanodegree programs in technology skills, aimed at career advancement for individuals and organizations. It offers a structured, project-based learning experience with content co-developed with industry leaders, covering fields like AI, data science, and programming. Students can learn at their own pace through short videos, quizzes, and problem sets, and can receive career support such as resume reviews and mentorship.

Udacity's enterprise offering provides tailored, scalable talent transformation solutions focusing on in-demand digital technologies like AI, data science, and cybersecurity. Designed for organizations of all sizes (from small teams to large corporations), these programs aim to upskill workforces, foster innovation, and drive business outcomes. Enterprise customers can utilize features such as customized learning paths, hands-on projects, assessments, mentor support, and analytics & management workflows via the Udacity Management Platform to drive learning and upskilling among their employees.

Client

Udacity Enterprise “Skills” Team

Duration

30 days

Tools

Figma, ChatGPT, Lovable, Gemini, Jira, Confluence, G Suite

Problems & Purpose

Problems:

Staff needed a faster and more efficient way to create and manage assessments, as the existing process was slow, manual, and not scalable.

Non-technical staff lacked the ability to independently create assessments, resulting in bottlenecks and operational delays.

There was no reliable way to measure or evaluate the quality of assessment questions, limiting confidence in the accuracy and fairness of the assessments.

As the team explored AI-generated assessment content, we needed a structured human-review process to approve or reject AI-generated questions and ensure they met quality and pedagogical standards.

Goals:

Reduce reliance on engineering to configure or enable an assessment.

Users & Audience

Key Users: Udacity internal staff who support enterprise customers that have purchased Workera Assessments and/or Learning Plans with assessments, as well as Udacity team members who handle assessment creation and management tasks:

Customer Success Operations

Customer Success Managers

Content Managers

Roles & Responsibilities

My Role: Lead Product Designer (design research, AI prototyping & ideation, UX/UI design)

Team: “Skills” Team; 1 Product Manager, 1 Lead Engineer, 1 Backend Engineer, 1 Frontend Engineer

Stakeholders: Sales, Customer Success Ops, Customer Success, Content, Key Customers with pending renewals

All collaboration was conducted remotely.

Scope & Constraints

Timeline: 3-4 weeks (very quick turnaround)

Scope:

Enable full Assessment CRUD functionality within the existing management platform.

Integrate learnings from an engineering-built Python prototype for AI-generated questions into the production workflow.

Collaborate with engineering to understand backend limitations, including legacy platform constraints and new API/database supporting AI-generated items.

The original scope focused on introducing an AI-driven question creation flow; however, discovery revealed that the existing assessment creation experience was incomplete. We expanded the scope to build the core assessment CRUD workflow so it could serve as the foundation for both manual and AI-generated creation.

This led to necessary scope adjustments to ensure both legacy manual workflows and new AI-enabled flows were productized and scalable.

Key Use Cases:

Generate assessment questions using AI.

Manually create assessments from scratch, using client-provided or internally developed content.

Replace or remove low-quality or poorly performing questions.

Constraints:

Deliver a functional experience quickly to support assessment contracts scheduled for Q3 - Q4.

Minimize changes to the partially built legacy experience; for speed, we layered new functionality on top of the existing experience without major redesign.

Process

(1) Assessments prioritized (Roadmap buster)

(2) Design Research & Discovery Interviews

(3) Ideation (including AI prototyping)

(4) Stakeholder Review & Feedback

(5) Design Iteration based on findings

(6) Handoff to Engineering & Design Review

(1) Assessments prioritized (Roadmap buster)

Due to contractual obligations and work already done by engineering, this initiative was added to our roadmap after the quarter had been planned. It was high priority and needed to be completed quickly, so the work was expedited, and I made some assumptions and took some shortcuts in my process.

(2) Design Research & Interviews

I researched the current-state assessment experience and stakeholder workflows, reviewed the Python prototype created by engineering, and spoke with cross-team engineers about the work completed, technical constraints, and gaps, in order to fully understand the scope and constraints.

(3) Design Ideation (including AI prototyping)

tbd

Lovable ideation example screens

(4) Stakeholder Review & Feedback (Ops, Content, Engineering)

tbd

(5) Design Iteration based on findings

tbd

(6) Handoff & Design Review

tbd

Outcomes & Lessons

What was Delivered

tbd

What I Learned

tbd

Before

View Assessments (created manually by engineers) in the management platform

View question sections within Assessments (created manually by engineers) in the management platform

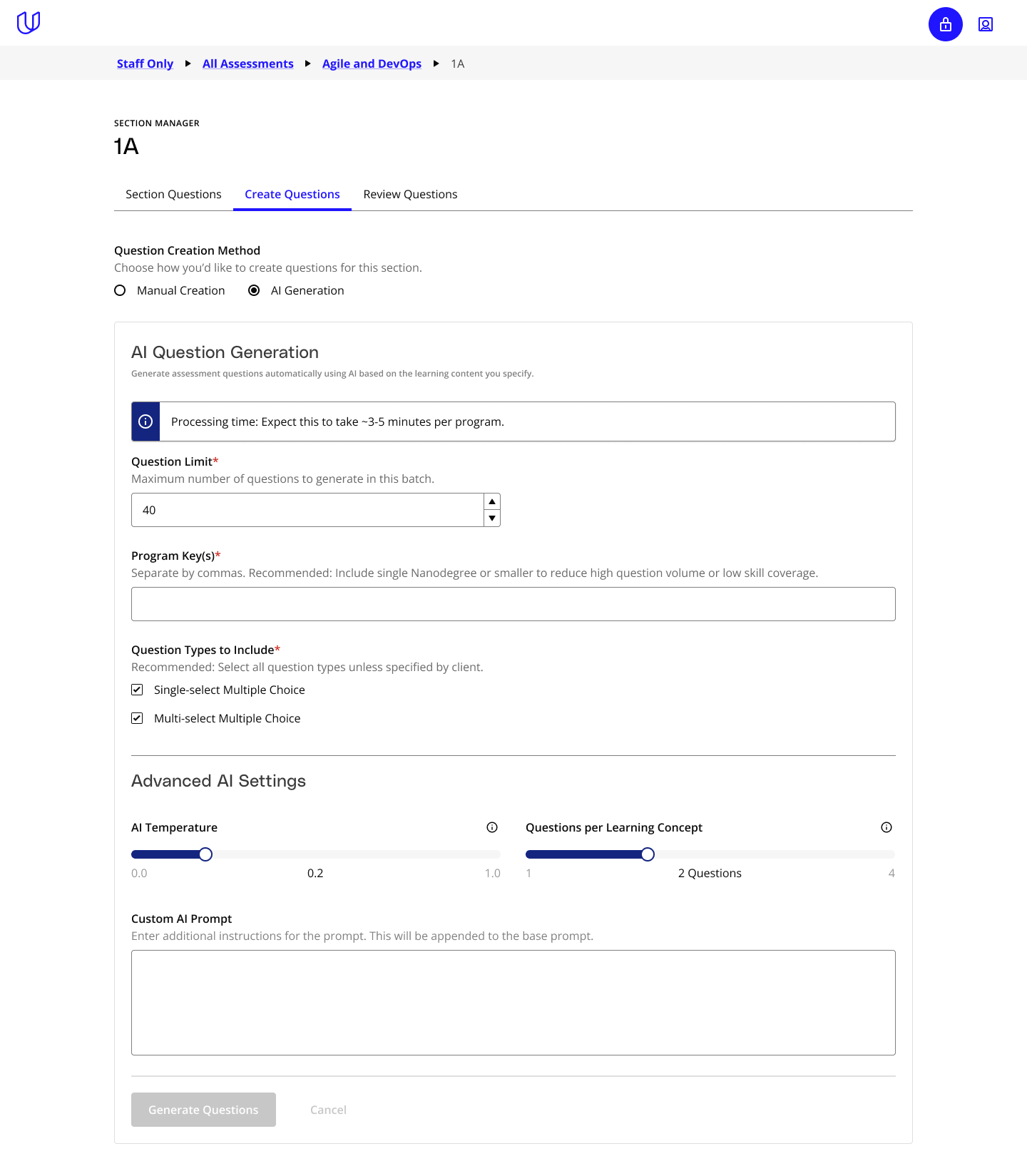

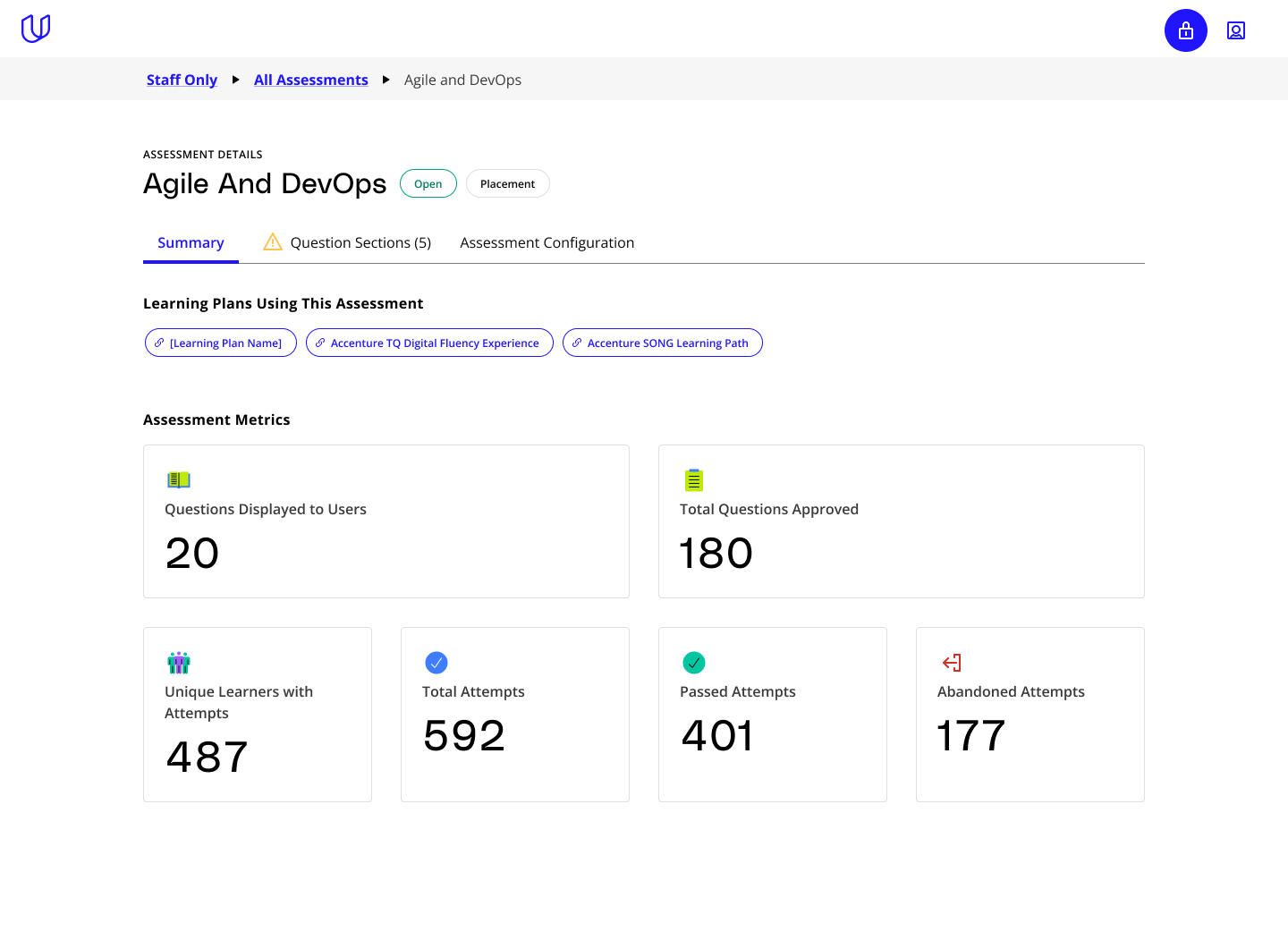

After

Fully functional assessment CRUD (enabled for staff users)

AI-generated and manual question CRUD support
